Building TalkBerry: Lessons from a Voice-First Language App

📱 Building TalkBerry — A Voice-Based Language Learning App

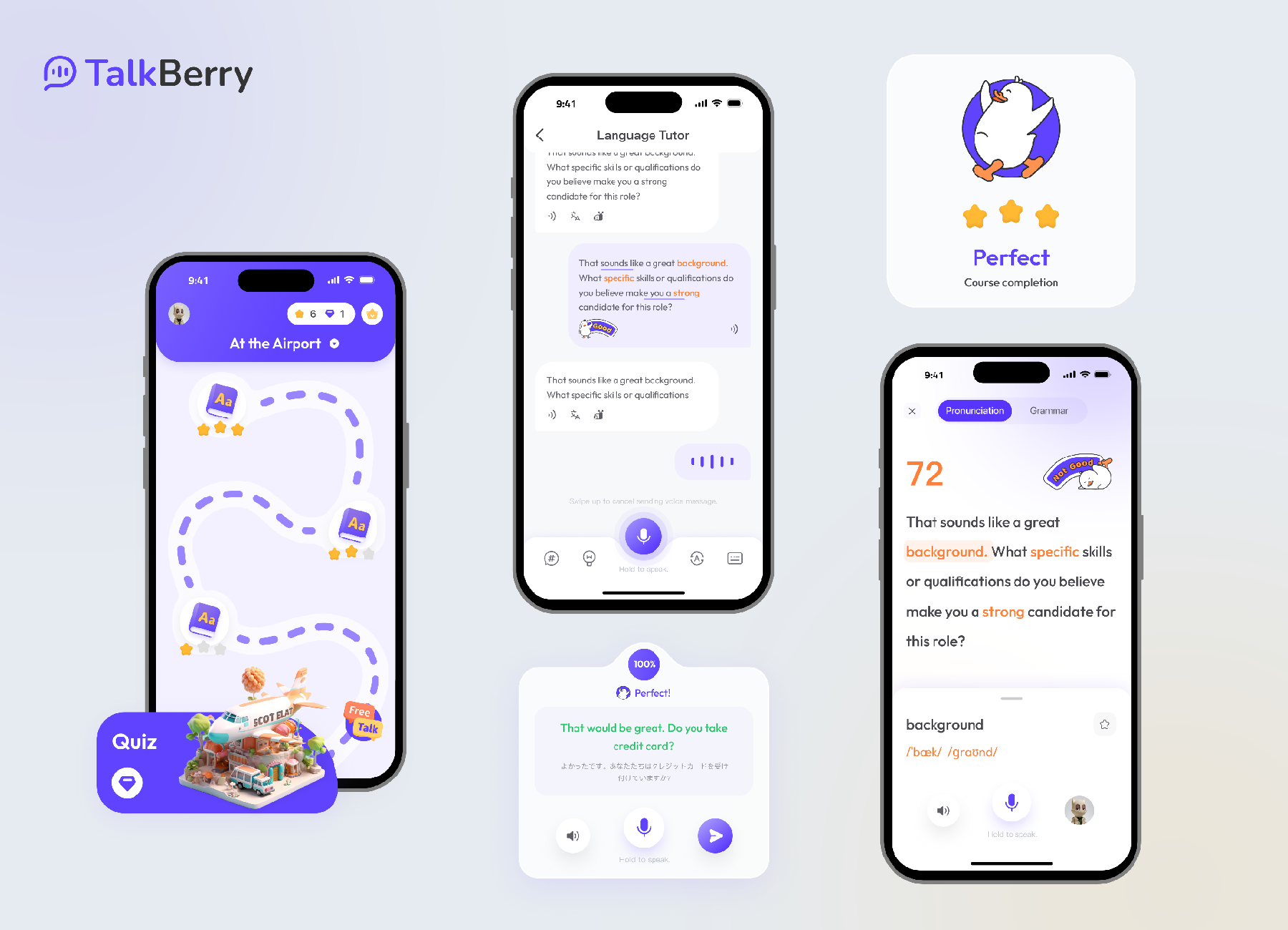

I was part of the team that developed the TalkBerry mobile app, a voice-first language learning tool that helps users practice spoken English through realistic, AI-powered conversations.

TalkBerry began as a Chrome extension and grew rapidly—crossing 30,000 users in the first three months. Our goal with the mobile version was to bring the same low-friction, immersive experience to a broader audience, while improving the feedback loop, session fluidity, and accessibility on mobile devices.

This post reflects on the development process and serves as a starting point for discussing technical challenges in building real-time interactive applications.

🧠 What Makes TalkBerry Work

TalkBerry aims to make practicing spoken English gamified and trackable. It places users in real-life situations and invites them to hold real conversations. Each lesson has a high-level objective, and the user works with the AI, which acts as a simulated instructor, to achieve it. Throughout the journey, the app’s duck acts as the user’s friendly guide.

Within each lesson, the flow is simple: you open the app, start speaking, the AI responds, and the conversation continues until the lesson’s goal is achieved in the chat. This conversation loop was the most technically demanding part of the app, and the heaviest in terms of state management.

From a product standpoint, TalkBerry feels simple—but under the hood, that simplicity depends on careful handling of asynchronous voice input, dialogue state, and user pacing.
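To make the state-management point concrete, here is a minimal sketch of one lesson’s conversation loop as a pure reducer, written in TypeScript since the app is React Native. The phase names, event names, and the `goalReached` flag are hypothetical illustrations, not TalkBerry’s actual code. The idea it shows: every async callback (mic, speech-to-text, language model, audio player) becomes an event, and events that don’t fit the current phase are ignored.

```typescript
// Hypothetical phases of one lesson's conversation loop (illustrative names only).
type Phase = "idle" | "listening" | "transcribing" | "thinking" | "speaking" | "done";

// Every async callback (mic, STT, language model, audio player) becomes an event.
type TurnEvent =
  | { type: "START" }
  | { type: "SPEECH_END" }
  | { type: "TRANSCRIPT"; text: string }
  | { type: "REPLY"; text: string; goalReached: boolean }
  | { type: "PLAYBACK_END" }
  | { type: "ERROR" };

interface Message {
  role: "user" | "ai";
  text: string;
}

interface LessonState {
  phase: Phase;
  history: Message[];
  goalReached: boolean;
}

const initialState: LessonState = { phase: "idle", history: [], goalReached: false };

// Pure reducer: returns the next state. Events that don't match the current
// phase return the same state object unchanged.
function reduce(state: LessonState, event: TurnEvent): LessonState {
  switch (state.phase) {
    case "idle":
      return event.type === "START" ? { ...state, phase: "listening" } : state;
    case "listening":
      return event.type === "SPEECH_END" ? { ...state, phase: "transcribing" } : state;
    case "transcribing":
      if (event.type === "TRANSCRIPT") {
        const msg: Message = { role: "user", text: event.text };
        return { ...state, phase: "thinking", history: [...state.history, msg] };
      }
      // A failed transcription sends the learner back to listening instead of stalling.
      return event.type === "ERROR" ? { ...state, phase: "listening" } : state;
    case "thinking":
      if (event.type === "REPLY") {
        const msg: Message = { role: "ai", text: event.text };
        return {
          ...state,
          phase: "speaking",
          history: [...state.history, msg],
          goalReached: event.goalReached,
        };
      }
      return event.type === "ERROR" ? { ...state, phase: "listening" } : state;
    case "speaking":
      // The lesson only ends after the AI's last reply has finished playing.
      if (event.type === "PLAYBACK_END") {
        return { ...state, phase: state.goalReached ? "done" : "listening" };
      }
      return state;
    default:
      // "done" is terminal.
      return state;
  }
}
```

A pure reducer like this fits React’s `useReducer` hook, and it keeps stale callbacks (say, a transcript arriving after an error) from corrupting the conversation.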

What we focused on:

- Conversational realism: users should feel like they’re talking to a person, not pinging an API. We tuned pauses, turn-taking, and fallback prompts to avoid abrupt transitions.

- In-context tips and guidance: the app offers pronunciation pointers and extra exposure to language nuances through subtle, supportive guidance.

- Feedback without friction: instead of grammar drills, we surface corrections subtly, by repeating misused words correctly or highlighting missed vocabulary in follow-ups.
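Fallback prompts are one way to handle a silent learner without an abrupt transition. The sketch below assumes an escalating ladder of nudges keyed on how long the learner has been silent; the thresholds, the wording, and the `nextNudge` helper are all hypothetical.

```typescript
// Hypothetical escalation ladder: each nudge is a little more helpful than the last.
interface Nudge {
  afterMs: number; // silence needed before this nudge is offered
  prompt: string;
}

const NUDGES: Nudge[] = [
  { afterMs: 6000, prompt: "Take your time. What would you like to say?" },
  { afterMs: 12000, prompt: 'You could start with "I would like..."' },
  { afterMs: 20000, prompt: "Would you like me to repeat the question?" },
];

// Returns the next nudge to show, or null if none is due yet.
// `shown` counts nudges already shown during the current silence, so the
// prompts escalate one step at a time instead of skipping ahead.
function nextNudge(silenceMs: number, shown: number): Nudge | null {
  if (shown >= NUDGES.length) return null; // ladder exhausted
  const candidate = NUDGES[shown];
  return silenceMs >= candidate.afterMs ? candidate : null;
}
```

In an app, a timer tick would call `nextNudge` with the elapsed silence and reset `shown` to 0 as soon as the learner starts speaking again.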

🗣 Voice UX = Timing + Recovery

Voice interfaces demand a different kind of interaction design. It’s not just about “what the AI says” but when, how fast, and what happens if it doesn’t understand.

We had to solve:

- Mic handling across platforms (foreground/background transitions, call interruptions)

- Managing API usage for text-to-speech (TTS) and speech-to-text (STT), including pre-generating some audio when possible

- Using AI to convey “busy” states in the app and to smooth the UX while processing or loading is underway

These constraints shaped many of our tech decisions, especially around streamlining voice-to-text and orchestrating a coherent, low-latency experience.
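Pre-generating audio pairs naturally with a cache. This is a minimal sketch under stated assumptions: `SynthesizeFn` is a hypothetical stand-in for whatever cloud TTS client is used, clips are keyed by voice plus whitespace-normalized text, and the cache stores in-flight promises so concurrent requests for the same line trigger only one API call.

```typescript
// Hypothetical signature for a cloud TTS call; swap in the real client.
type SynthesizeFn = (text: string, voice: string) => Promise<Uint8Array>;

class TtsCache {
  private readonly cache = new Map<string, Promise<Uint8Array>>();
  private readonly synthesize: SynthesizeFn;
  apiCalls = 0; // exposed only to make the savings observable

  constructor(synthesize: SynthesizeFn) {
    this.synthesize = synthesize;
  }

  // Collapse whitespace so "Hello  there " and "Hello there" share one clip.
  private key(text: string, voice: string): string {
    return `${voice}::${text.trim().replace(/\s+/g, " ")}`;
  }

  // Returns cached audio, or starts one synthesis request and caches the promise
  // itself, so concurrent callers asking for the same line share a single API call.
  get(text: string, voice: string): Promise<Uint8Array> {
    const k = this.key(text, voice);
    const cached = this.cache.get(k);
    if (cached) return cached;
    this.apiCalls++;
    const pending = this.synthesize(text, voice);
    this.cache.set(k, pending);
    // Evict failed requests so a later call can retry.
    pending.catch(() => {
      if (this.cache.get(k) === pending) this.cache.delete(k);
    });
    return pending;
  }

  // Warm the cache for predictable lines (greetings, fallback prompts) ahead of time.
  async pregenerate(lines: string[], voice: string): Promise<void> {
    await Promise.all(lines.map((line) => this.get(line, voice)));
  }
}
```

Caching the promise rather than the finished audio matters on mobile, where the same prompt is often requested twice before the first response has arrived.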

⚙️ Stack Sketch (Mobile)

While I can’t share full infra details, here’s the rough architecture:

- Frontend: React Native + native mic + TTS integrations

- Voice Input: Cloud speech-to-text, with caching

- AI Layer: Prompt-engineered language model (OpenAI), with light session memory

- Feedback: Correction engine that draws on AI results, accounts for users’ voices and accents, orchestrates multiple grammar APIs, and visually aligns correct, incorrect, and suggested words
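One simple way to line up correct, incorrect, and suggested words is a word-level longest common subsequence (LCS) between the learner’s transcript and a target sentence. This is a swapped-in illustrative technique, not TalkBerry’s correction engine: the target sentence is assumed to come from an upstream step (the AI or a grammar API), and the mapping of “incorrect” and “suggested” below is an assumption.

```typescript
// Labels for the correct / incorrect / suggested states (mapping is an assumption).
type WordMark = "correct" | "incorrect" | "suggested";

interface AlignedWord {
  word: string;
  mark: WordMark;
}

// Lowercase, strip punctuation (keeping apostrophes), and split into words.
function tokenize(s: string): string[] {
  return s.toLowerCase().replace(/[^a-z0-9'\s]/g, "").split(/\s+/).filter((w) => w.length > 0);
}

// Word-level LCS alignment of what the learner said against a target sentence.
// Shared words are "correct"; words only the learner said are "incorrect";
// target words the learner missed or replaced are "suggested".
function alignWords(spoken: string, target: string): AlignedWord[] {
  const a = tokenize(spoken);
  const b = tokenize(target);
  // dp[i][j] = length of the LCS of a[i..] and b[j..]
  const dp: number[][] = Array.from({ length: a.length + 1 }, () =>
    new Array<number>(b.length + 1).fill(0),
  );
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      dp[i][j] = a[i] === b[j] ? dp[i + 1][j + 1] + 1 : Math.max(dp[i + 1][j], dp[i][j + 1]);
    }
  }
  // Walk the table to emit the alignment in sentence order.
  const out: AlignedWord[] = [];
  let i = 0;
  let j = 0;
  while (i < a.length && j < b.length) {
    if (a[i] === b[j]) {
      out.push({ word: b[j], mark: "correct" });
      i++;
      j++;
    } else if (dp[i + 1][j] >= dp[i][j + 1]) {
      out.push({ word: a[i], mark: "incorrect" });
      i++;
    } else {
      out.push({ word: b[j], mark: "suggested" });
      j++;
    }
  }
  while (i < a.length) out.push({ word: a[i++], mark: "incorrect" });
  while (j < b.length) out.push({ word: b[j++], mark: "suggested" });
  return out;
}
```

For “I goed home.” against “I went home.”, this yields `i` (correct), `goed` (incorrect), `went` (suggested), `home` (correct), which maps directly onto per-word highlighting in the UI.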

A lot of time went into orchestrating the flow: when to listen, when to speak, how long to wait, how to handle multiple languages, and how to integrate with the gamified experience.
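Deciding how long to wait before treating the learner’s turn as finished is a core part of that orchestration. A common baseline is energy-based endpointing: once enough speech has been heard, end the turn after a run of trailing silence. The `EndOfTurnDetector` class, its config fields, and the thresholds are hypothetical; production systems typically rely on a proper voice-activity detector rather than a raw energy threshold.

```typescript
// Hypothetical tuning knobs; real values would come from testing with learners.
interface EndpointConfig {
  frameMs: number; // duration of one audio frame
  speechThreshold: number; // normalized frame energy at or above this counts as speech
  endSilenceMs: number; // trailing silence that ends the learner's turn
  minSpeechMs: number; // speech required before a turn is allowed to end
}

class EndOfTurnDetector {
  private readonly cfg: EndpointConfig;
  private speechMs = 0;
  private silenceMs = 0;

  constructor(cfg: EndpointConfig) {
    this.cfg = cfg;
  }

  // Feed one frame's energy; returns true on the frame where the turn is judged complete.
  push(energy: number): boolean {
    if (energy >= this.cfg.speechThreshold) {
      this.speechMs += this.cfg.frameMs;
      this.silenceMs = 0; // a mid-sentence pause is forgiven once speech resumes
      return false;
    }
    // Silence before enough speech (the learner is still thinking) never ends the turn;
    // that case is left to fallback prompts.
    if (this.speechMs < this.cfg.minSpeechMs) return false;
    this.silenceMs += this.cfg.frameMs;
    if (this.silenceMs >= this.cfg.endSilenceMs) {
      this.reset();
      return true;
    }
    return false;
  }

  reset(): void {
    this.speechMs = 0;
    this.silenceMs = 0;
  }
}
```

The trade-off lives in `endSilenceMs`: too short and hesitant learners get cut off mid-thought, too long and the AI feels sluggish.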

🧪 Why I Found This Interesting

This project gave me a deeper appreciation for:

- Voice interface edge cases—silent users, accents, interruptions, ambient noise

- Making AI feel interactive, not just reactive

- The subtle art of designing feedback that’s helpful but not discouraging

- UI that adapts to the user: not just forms and REST calls, but interfaces shaped by how users engage with the app

- Mechanisms to reduce cognitive overload in apps with inherent complexity

- The satisfaction of seeing a UI work well and reflect the user’s progress in real time

It also raised new questions I’d like to explore in future builds:

- Can we retain lightweight memory across conversations without full transcript storage?

- How do we blend goal-directed learning (e.g., preparing for a test) with freeform chatting?

- What would a collaborative voice task with two learners + an AI facilitator look like?

🧭 TalkBerry as a Reference

For others working on interactive apps, especially those using voice or AI, TalkBerry is a useful reference for:

- Designing for flow, not features

- Building lightweight MVPs (like the original extension) to validate behavior early

- Prioritizing response timing and recovery, not just content quality

Credit to the awesome team at Userly Labs for making it happen.